Msty Changelog

Note: Msty should update itself automatically in the background. Let it run for a few minutes.

If you don’t see an update, try downloading and installing the latest Msty installer for your platform. There’s no need to uninstall Msty—your data will not be deleted when re-installing.

v1.9

April 28, 2025

- New: RTL language support in chat messages (Beta)

- New: Local AI version 0.6.6

- New: Support for GPT-4.1 models

- New: Support for o3 and o4-mini models

- New: Updated Azure model list

- New: New Language Support (German)

- Improve: Better GPU support for Windows and Linux users.

- Improve: Code syntax highlighting for more languages and file types (Swift, Dockerfile, Vim, etc.)

- Improve: JSON file parsing

- Improve: YouTube video transcriptions

- Improve: Better warning label for image attachments

- Improve: Mark 'Organization' field in Azure Remote Provider as optional

- Improve: Default model context length increased to 32K (applies to models that support it)

- Fix: Stale msty-local process bug on Windows (any existing stale processes need to be quit manually after quitting Msty)

- Fix: Model pull progress issue after onboarding

v1.8

March 12, 2025

- New: Full-text search

  - Requires re-indexing existing messages from search options

- New: Modular rendering engine with Mermaid Diagram support

  - Turn on/off modules individually (LaTeX, Markdown, Mermaid Diagram, Code Highlighting, etc.)

  - Existing users of LaTeX will need to enable the module from settings after this update

- New: Continue generation with assistant messages

- New: Thinking support for Claude 3.7 Sonnet

  - Enable from model options

- New: Choose Jina AI re-ranking models from Knowledge Stack's reranking configuration

- New: Support for self-hosted Azure OpenAI instances

- New: Support for OpenAI's GPT-4.5 Preview and GPT-4o Search Preview models

- New: Improved readability with customizable chat split widths

  - Choose from Slim, Balanced, Roomy, Spacious, and Full width

- New: Local AI version 0.6.0

  - Make sure you are on the latest version for stability

  - If you are not on the latest version, Mac users can update it from Local AI settings. Linux and Windows users may need to download and install the latest installer from the website.

- New: Gemma 3, Phi-4 Mini and other new Local AI models

- New: New Language Support (work in progress)

  - French

  - Indonesian

  - Thanks to contributors hazekezia and Davodka1993 on GitHub.

- Improve: Timeout with chat and embedding models

- Improve: Scrolling in remote model provider add/edit dialog

- Fix: Jina AI re-ranking

- Fix: Title bar not draggable when message is underneath

v1.7

February 10, 2025

- New: Show/hide model providers in the model selector (Aurum Perk)

  - For remote models, use the toggle switch in the remote model providers table

  - For local models, use the toggle switch in Local AI settings

- New: Reasoning tokens for OpenAI o-series models

- New: DeepSeek as a Remote Models Provider

- New: Use model instructions as developer prompts for OpenAI o1 and o3-mini models

- New: Updated Perplexity model names

- Improve: LaTeX rendering

- Improve: Save model providers without an API key

  - Useful for locally hosted OpenAI-compatible servers that may not require API key authentication

- Improve: macOS icon

- Improve: Local AI keepalive when chatting

  - Useful for long-context conversations and heavy models that may take more than 5 minutes to return the first response

- Fix: Delvify not working in some cases

v1.6

January 29, 2025

- New: Thinking tags for reasoning models like DeepSeek R1 from OpenAI-compatible providers (e.g., OpenRouter)

- New: Assistant messages show how long thinking/reasoning models spent thinking

- New: Copy the thinking section from a thinking/reasoning model's response

- New: New Language Support (work in progress)

  - Simplified Chinese

  - Russian

- Improve: Thinking context from previous messages is not included in subsequent chats

- Improve: Title generation for thinking models

- Improve: OpenAI o1 model support (if issues persist, delete and re-add your OpenAI key)

- Improve: Include 15 chunks by default when chatting with a Knowledge Stack

  - Applies to new stacks created after this version. For previously created stacks, it can be changed manually from stack settings.

- Improve: LaTeX rendering in new rendering engine

- Improve: System prompt formatting

- Improve: Icons for Ollama Model Hub models

- Improve: Markdown table column scrolling

- Improve: Model download issue from Ollama Model Hub

- Improve: Model download progress UI

- Fix: Disable update button when waiting for the app to restart

v1.5

January 26, 2025

- New: New faster and better rendering engine (Beta)

  - This makes message rendering much faster

  - You can switch back to the old rendering engine by disabling the new engine in settings

- New: Thinking/reasoning tags support in messages (Beta)

  - Supported tags include: <think>, <reasoning>, and <thinking>

- New: Local AI v0.5.7

  - You can update to the latest version by going to Settings > Local AI

  - If you experience issues, please consider re-installing Msty by downloading the latest installer

- New: New reasoning Local AI model (Qwen DeepSeek R1)

- Improve: Real-Time Data (RTD) responses (Google and Ecosia)

- Improve: App loading time

- Improve: Typing latency in chats with more than 100 messages

- Improve: Graceful handling of streaming message state after an error occurs

- Fix: Downloading models from Ollama and Hugging Face Model Hub (breaking change; requires updating the embedded Local AI to v0.5.5 or later)

- Fix: Importing GGUF files (breaking change; requires updating the embedded Local AI to v0.5.5 or later)

Note: We’ve removed the app health check pings that previously occurred on app load. This feature was originally included during Msty’s early days to monitor and address crashes proactively. However, with Msty now in a stable state, this step is no longer necessary. This change reflects our continued commitment to user privacy—Msty has never collected or required any personally identifiable information, and this update further reinforces that principle.

v1.4

December 24, 2024

- New: Model compatibility gauge for downloadable models

- New: Remote embedding models support

  - Ollama/Msty Remote, Mistral AI, and Gemini AI in addition to OpenAI

  - Any OpenAI-compatible provider, such as MixedBread AI

- New: Bookmark chats and messages (Aurum Perk)

- New: Network Proxy Configuration (Beta)

- New: Local AI Models (Llama 3.3, Llama 3.2 Vision, QwQ, etc.)

- New: Prompt Caching with Claude models (Beta)

- New: Korean language support (work in progress)

- New: Gemini models support

- New: KV cache quantization enabled for models

- New: Support for Cohere AI

- New: Disable formatting for whole chat

- Improve: App loading time

- Improve: Docx file parsing

- Improve: Minimize Useful Shortcuts list

- Improve: Header sizes in markdown for h3-h6

- Improve: Delete chat from chat overflow menu

- Improve: Use the latest title of the conversation in exported chats

- Improve: Scrolling issue with long system prompt in empty chat

- Improve: Disable autospell

- Improve: Math equations font size

- Improve: o1 model support for OpenAI-compatible providers

- Improve: Message sync across splits

- Fix: NaN with model output tokens

- Fix: Chat creates a branch on regeneration if stopped prematurely

- Fix: Edit OpenAI key from Knowledge Stacks onboarding if a key is already present

- Fix: Allow composing with only YouTube links in Knowledge Stacks

- Fix: Chat export issue with all branches in JSON

- Fix: Clicking on links (when enabled in messages) opens in the same window

- Fix: Switching model resets model instructions

- Fix: Chat view not updating after chat tree is flattened

- Fix: Custom models in API keys not retaining purpose

v1.3

October 21, 2024

- New: Export chat messages

- New: Azure OpenAI integration as a remote provider

- New: Live document and YouTube attachments in chats

- New: Choose Real-Time Data Search Provider (Google, Brave, or Ecosia)

- New: Advanced Options for Real-Time Data (custom search query, limit by domain, date range, etc.)

- New: Edit port number for Local AI

- New: Apply model template for Local AI models from the model selector

- New: Pin models in the model selector

- New: Overflow menu for chat messages with descriptive option labels

- New: Enable/Disable markdown per message

- New: Keyboard shortcuts (edit and regenerate messages, apply context shield, etc.)

- New: Save chat from Vapor Mode

- New: Capture Local AI service logs

- New: Granite3 Dense and Granite3 Moe Local AI models

- Improve: Use Workspace API keys across multiple devices

- Improve: Show model's edited name in model selector and other places

- Improve: Pass skip_model_instructions and skip_streaming from extra model params

- Improve: Prompt for better LaTeX support

- Improve: Sync model instructions across splits

- Improve: Sync context shield across splits

- Improve: Sync sticky prompt across splits

- Improve: Sync selected Knowledge Stacks across splits

- Improve: Sync attachments across splits

- Improve: Auto-chat title generation

- Improve: Loading chats with multiple code blocks

- Improve: Double click to edit message

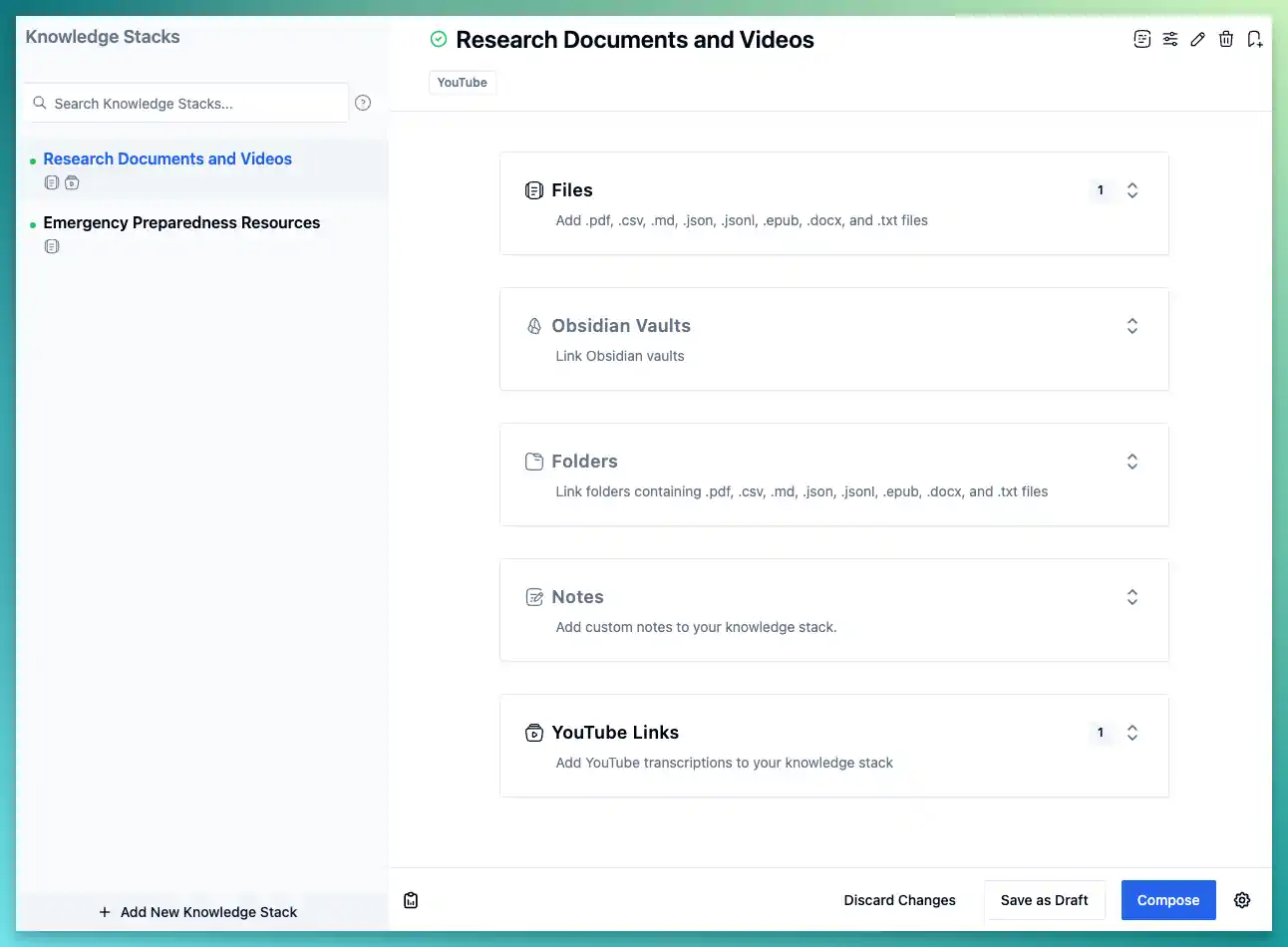

- Improve: More file types in Knowledge Stacks

- Improve: Compose new changes in Knowledge Stacks

  - The first compose after the update will recompose everything in the stack

  - Subsequent composes will only process new changes

- Improve: Show and link active workspace path in settings

- Improve: Show copy code button at the bottom of the code block

- Improve: Chat model instructions

- Fix: Show sidebar expand icon when sidebar is collapsed by dragging

- Fix: Keep-alive in model configuration not applying correctly

- Fix: Initial model instructions not being set properly in multi-splits

- Fix: Code light theme is not persistent

- Fix: Clicking markdown links opening in built-in browser

- Fix: Editing chat title from titlebar does not work with loaded split preset

- Fix: Cannot click on delve keywords

- Fix: Unique model names for Local AI models

- Fix: Image attachment previews

- Fix: XML tags not rendering properly

- Fix: Ctrl+Enter is not branching-off user message

- Fix: Editing model template when no template was assigned before

v1.2

September 18, 2024

- New: Vapor Mode (Aurum Perk)

- New: Response metrics (Advanced Metrics View available as Aurum Perk)

- New: Context shield in conversations

- New: Edit a Local AI model (name, prompt template, purpose, etc.)

- New: Handpicked font styles

- New: Quick Theme Tray

- New: Code Themes (Aurum Perk)

- New: Split Thumbnail previews

- New: LaTeX rendering

- New: Custom Real Time Data search query

- New: Context menus for Chats, Knowledge Stacks, Prompt Library, etc.

- New: Set global prompts

- New: Open AI o1 models

- New: Perplexity models

- New: Setting to enable/disable opening links in an external browser

- New: ROCm support for AMD GPUs on Linux

- New: Option to either copy or link imported GGUF files

- Improve: Tooltips and tooltip content

- Improve: Global key (/) to focus chat input box

- Improve: Global shortcut to open settings on macOS (CMD + ,)

- Improve: Global shortcut to search in conversations (CMD + F)

- Improve: Add source name as tags when importing prompts

- Improve: Model selector width and selected model indicator

- Improve: macOS update issues

- Improve: Latest Local AI version on Linux

- Improve: Select all models when adding/editing an API key

- Improve: Install/delete featured model from list view

- Improve: Sidebar layout

- Improve: Download Hugging Face model without customization

- Improve: Auto-fill model name when downloading a Hugging Face model

- Improve: Clear all messages in a chat

- Improve: Delete all chats in a folder

- Fix: Split chats flickering when horizontal scrollbar is shown

- Fix: Local AI model doesn't interpret multiple images

- Fix: Pasting images not working on Windows

- Fix: Extra parameters issue during message regeneration

- Fix: Page reloading when pressing enter on an input box inside a dialog

- Fix: Clicking links from markdown messages opening inside the app

- Fix: Errors when deleting a Local AI model

- Fix: Global Local AI configs not applying

- Fix: Merge chats issue

- Fix: Perplexity models returning undesired response

- Fix: Aborting a Local AI model download doesn't remove the progress bar

- Fix: Split presets are disabled when opening an existing chat

- Fix: Pasting rich text pastes as image

- Fix: Stop generation not working with API models while device is offline

- Fix: Remote Ollama models issue

- Fix: Code theme not responsive to appearance changes

- Fix: Reset settings not resetting models path

- Fix: UI height and scrolling issues

- Fix: Switching model doesn't remember extra model parameters

v1.1

August 22, 2024

- New: Workspaces (watch this video that covers this feature in detail)

- New: Licensing required for business and commercial use

- New: Personal users can support our work by purchasing an Aurum license

- New: Delve mode with Flowchat™. Find more details here.

- New: Integrate Fabric prompts

- New: Copy-paste images and documents in the chat

- New: Assign model purpose (text, coding, vision, embedding)

- New: Set font size in settings (also increase or decrease from the menubar)

- New: Dock split chats

- New: Set GPU layers, num_ctx, and values for any other parameters in Local AI

- New: Indigo theme

- Improve: Opt-out of saving API keys in the local keychain (resolves some issues with keychain for Linux users)

- Improve: Shortcut to delete currently hovered split (CMD + SHIFT + D)

- Improve: Copy Knowledge Stack's citation texts

- Improve: Ignore chunks shorter than a defined length when composing a Knowledge Stack

- Improve: Persist show/hide real-time data links in AI message

- Improve: Show all prompts in quick prompts from the chat input box

- Improve: Ignore chat parameters by setting them to undefined in the model options

- Fix: Hide stop generation button when error occurs during a chat

- Fix: Extra parameters with OpenAI compatible providers

- Fix: Issue with Groq models

- Fix: Old model name displayed when regenerating a message

- Fix: Display label for save config with online models

- Fix: "Use as a prompt" feature on user messages

- Fix: Drag and drop .js files

- Fix: macOS - Onboarding page shown when opening the app without quitting

- Fix: Pressing Esc when editing chat title results in unexpected behavior

- Fix: First message must use the user role for Claude models

- Fix: Model option changes from the input box

- Fix: Issues with saving splits

- Fix: Split thumbnail ordering

- Fix: Real-time data links not appearing

- Fix: Replace action when resending a user message doesn't work as expected

- Fix: Models Hub not showing any models for Ollama

- Fix: Proxy config is saved for a message

- Fix: No delta exception

v1.0.1

July 11, 2024

- New: Import local GGUF file

- New: Support Bakllava and other Llava vision models

- New: Documentation site is now live (available at https://docs.msty.app/) (work-in-progress)

- Improve: Allow Python, Shell and other coding files as attachments

- Improve: Better models fetching for OpenAI API compatible endpoints

- Fix: Empty system message incompatibility with some OpenAI API compatible providers

- Fix: Closing and reopening the app shows the onboarding UI on Mac

- Fix: Microphone (STT) is no longer available

v1.0

July 9, 2024

- New: Real Time Data (watch one of our videos that covers this feature)

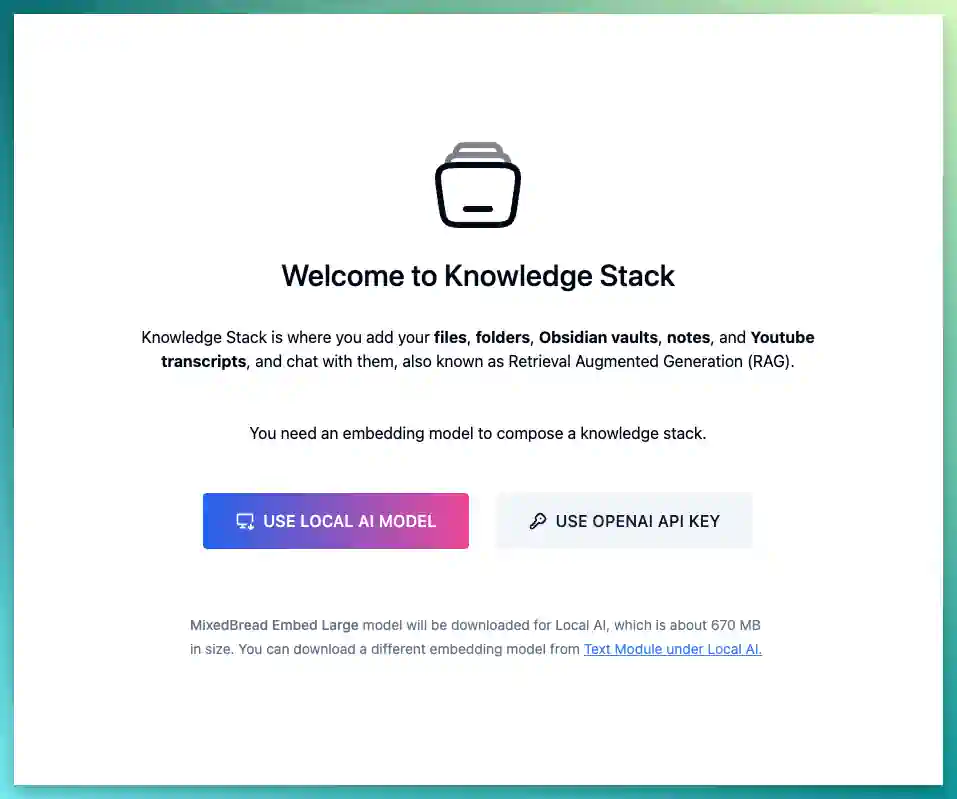

- New: Improved Knowledge Stack

  - Show progress of composition for each category and individual files

  - Show last successful composition date and time

  - Show the number of files composed for folders and Obsidian vaults

  - Better display of a Knowledge Stack's current status

  - Abort composition

  - Fix some composition issues, including the "table not found" error

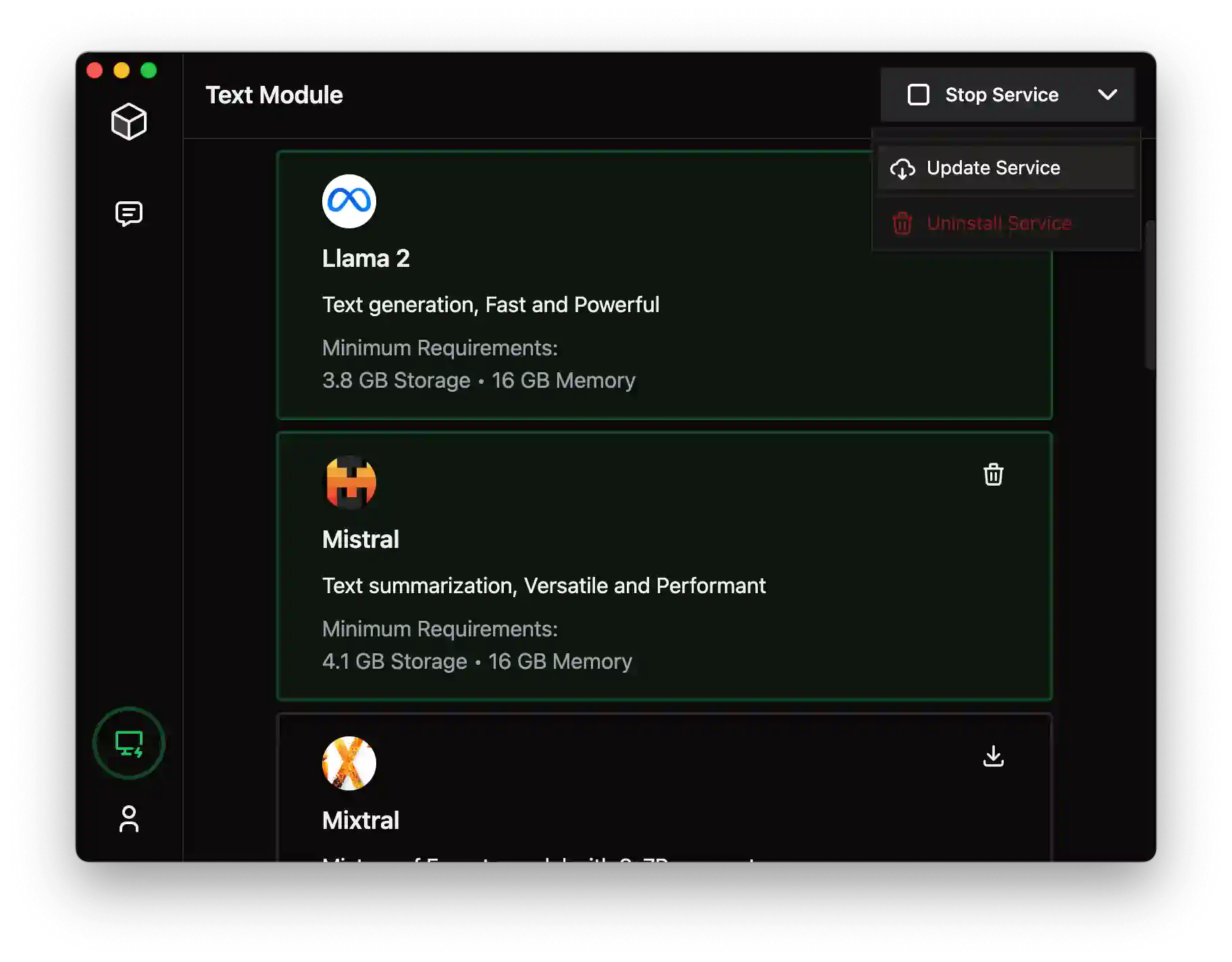

- New: Quick actions context menu for Local AI

- New: New Attachments UI

  - Sticky image attachments across sessions and across new messages

  - Show and manage all image attachments of a chat, not just the latest ones

  - Attach documents of type .txt, .md, .pdf, .docx, .html, .csv, .json, .ts, .js, .tsx, .vue, and many more!

  - Drag-and-drop documents are now treated as attachments rather than being composed into a Knowledge Stack

- New: 1-click scroll to bottom of chat

- New: Show size and other params for Ollama models

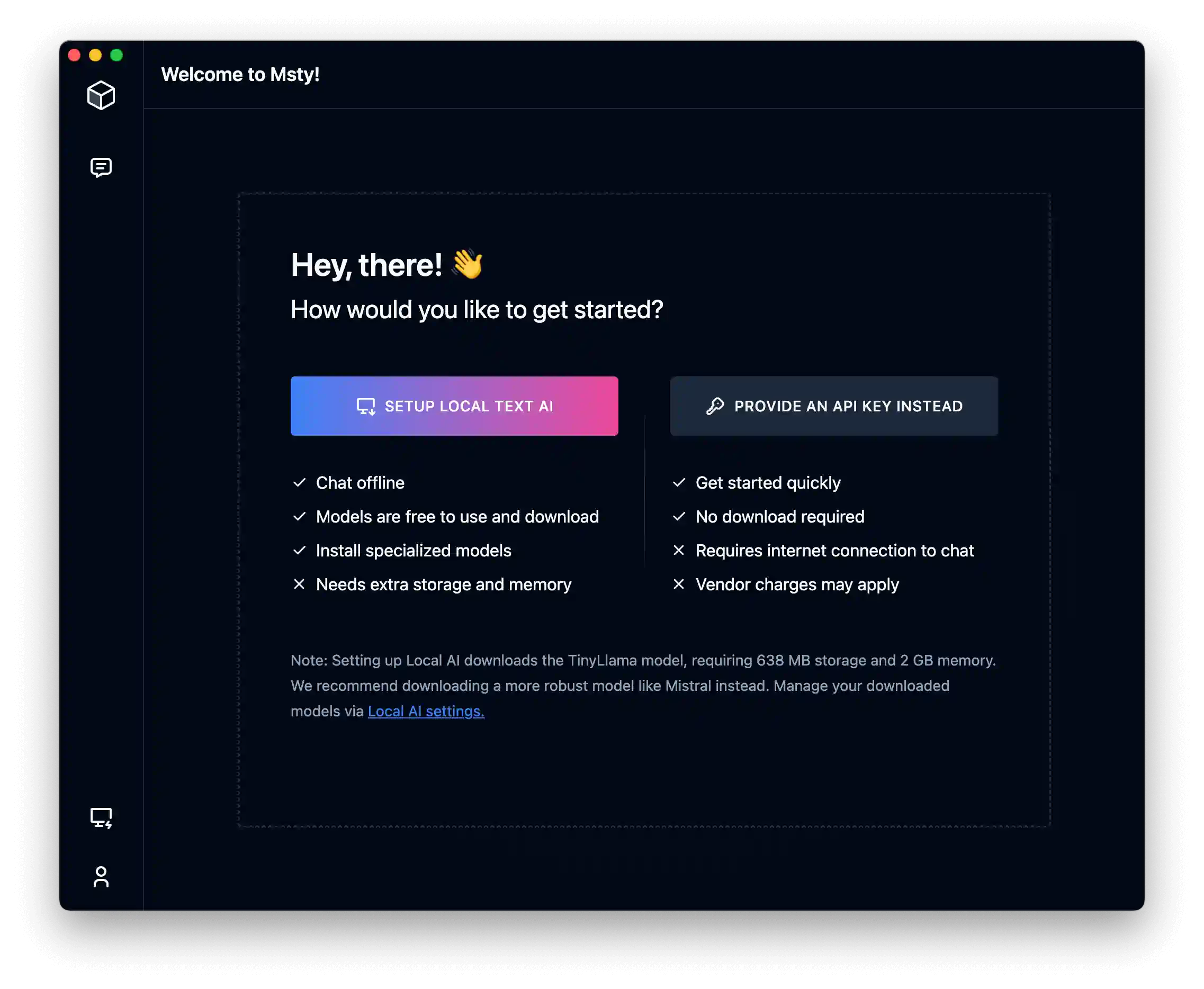

- New: Revamped onboarding UI

  - Select a different model when onboarding

  - Use Ollama's models directory without downloading a model

- New: New featured models - Claude 3.5 Sonnet, Qwen 2, Llava Llama, Llava Phi, DeepSeek Coder V2, and more

- New: New General UI settings

  - Auto-generate titles for new chats with non-local models

  - Easily update the app, fetch the latest featured models info, etc.

  - Show app paths for logs and data

  - Reset settings to default

  - Easily fetch models info without having to install the Local AI service

  - Show the app's version

- New: New Local AI settings

  - Set service configs such as the number of parallel chats, max loaded models, etc.

  - Pass any configurations supported by Ollama to the service as a JSON object

  - Change allowed origins

  - Set the amount of time to keep a model alive

  - Pass any custom configuration to models globally

  - Show service version

  - Watch one of our videos that covers the new settings in more detail

- New: Make Local AI service available on the network with a simple toggle

- New: Revamped Remote Models UI

  - Select models of your choice for providers such as OpenAI, Mistral, Claude, etc.

  - Edit API keys

  - Dedicated Msty remote provider to make it easy to connect to a remotely hosted Msty service

  - Dedicated Ollama remote provider to easily connect without worrying about what the full URL should be

  - Models are fetched automatically for both Msty remote and Ollama remote

- New: AMD GPU support for Windows

- New: Pass extra model params to Local and Remote models as a JSON object

- New: Easily add a new remote model provider from within the model selector without making a trip to the settings

- Improve: Copy installed model names to the clipboard

- Improve: File names are now added to a Knowledge Stack during composition, which should make it easier to refer to a file in queries

- Improve: Type in model params rather than using a slider to adjust the value

- Improve: Show icons for all remote model providers

- Improve: Latest Ollama/LocalAI service for all the platforms

- Improve: App icon

- Improve: App polishing

- Fix: Can remove an item during Knowledge Stack composition

- Fix: Model options not getting passed to some OpenAI compatible providers

- Fix: Clicking on Chat icon creates a new chat

- Fix: Adding a key fails silently on Linux

- Removed: Featured model variants. Users are encouraged to download them directly from the models hub

- Removed: Embedded changelog. Users are encouraged to visit the website for the latest changelog

- Many more bug fixes and improvements. Read our blog post for some of the highlights or watch a video that covers the new features and improvements.

v0.9

Jun 3, 2024

- New: Hugging Face integration - search and use any GGUF models from Hugging Face

- New: Create Ollama compatible models from Hugging Face

- New: Search Ollama and pull any model and any tags

- New: OpenRouter support

- New: Rerank RAG chunks using Jina AI API key

- New: Set model instructions at the folder level

- New: OpenAI-compatible providers. This makes it possible to use endpoints from remote Ollama instances and other online providers such as DeepSeek AI.

- New: New models - Mistral Codestral, Cohere Aya 23, Microsoft Phi 3, IBM Granite

- New: Resend a user message, which is very helpful when a message fails

- Improve: Revamped Local AI UI

- Improve: Faster inference on Windows and Mac using Flash Attention

- Improve: Preserve input message when switching chats

- Improve: Streaming scrolling

- Improve: Allow stopping message regeneration

- Improve: Lighter Windows installer

- Improve: Add .md, .markdown to the list of supported file extensions when browsing files

- Fix: Can't drop Markdown files to Knowledge Stack

- Fix: Close model settings popover when clicking outside and if there are no changes

- Fix: Groq API issues

- Fix: 500 errors on Windows for some users

- Fix: Clicking outside temporarily reverses edited chat title

v0.8

May 20, 2024

- New: Introducing Knowledge Stacks (RAG) in Msty

  - Create and manage multiple Knowledge Stacks

  - Upload files and folders

  - Link Obsidian vaults

  - Add custom notes

  - Link YouTube videos

  - Attach multiple Knowledge Stacks while chatting with LLMs

  - Process documents by characters or sentences

  - Get citations from your sources

  - Analytics report on processed files and links

- New: Real-time parallel chatting with models

- New: Branch-off conversation into a new split chat

- New: Search conversations

- New: Perplexity AI integration

- New: Claude vision models support

- New: GPT-4o support

- New: Latest Gemini models from Google

- New: Increased context window across all models

- Improve: Markdown table styles

- Improve: Message UI alignments

- Improve: Highlight the user's message for better visibility

- Improve: Audio recorder

- Improve: App navigation

- Fix: Allow dialogs to close on outside click and Esc

- Fix: Command prompt window popping up from Ollama server in Windows

- Fix: Bulk actions enabled in a new chat

- Fix: Chat message scroll to the bottom

v0.7

April 15, 2024

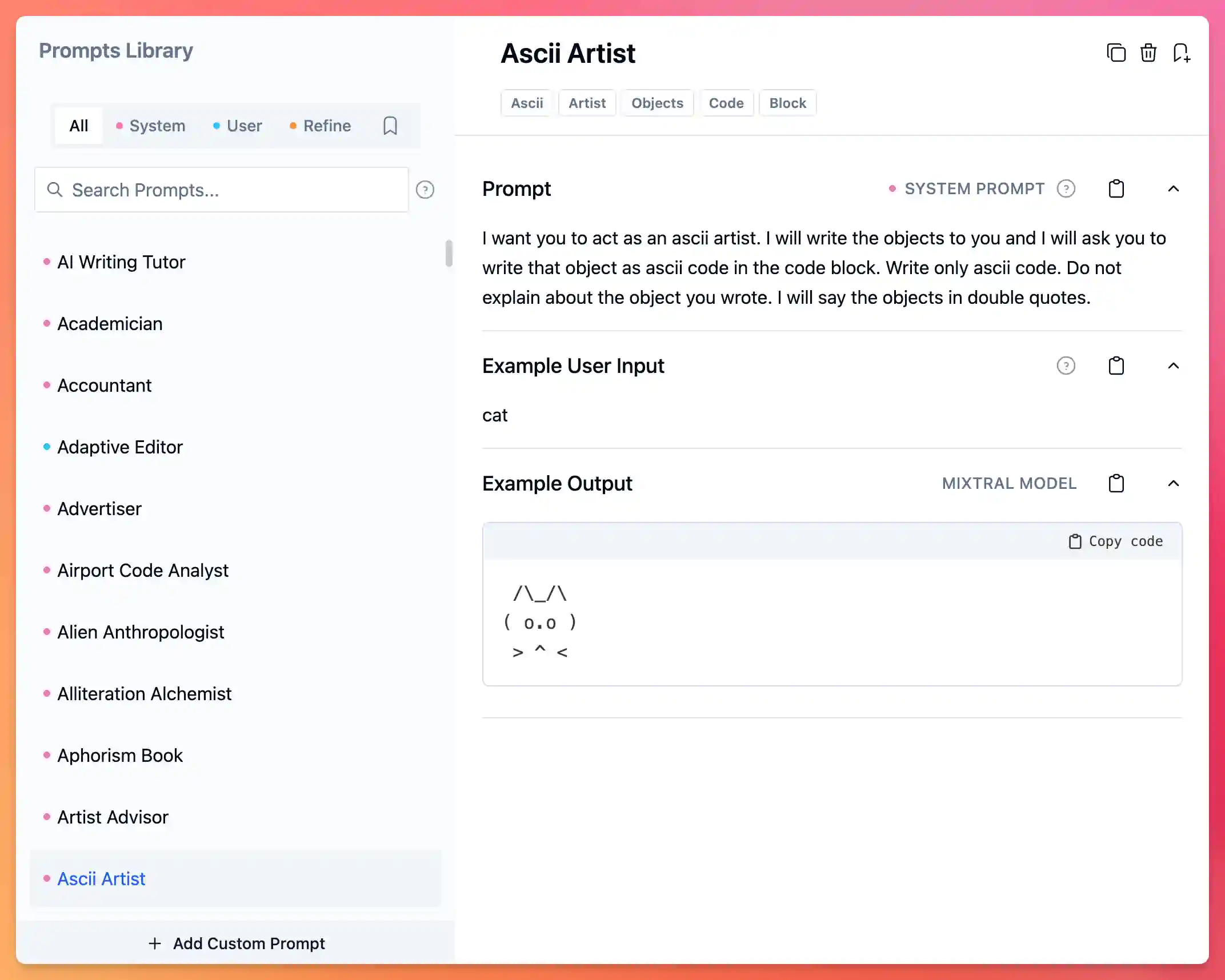

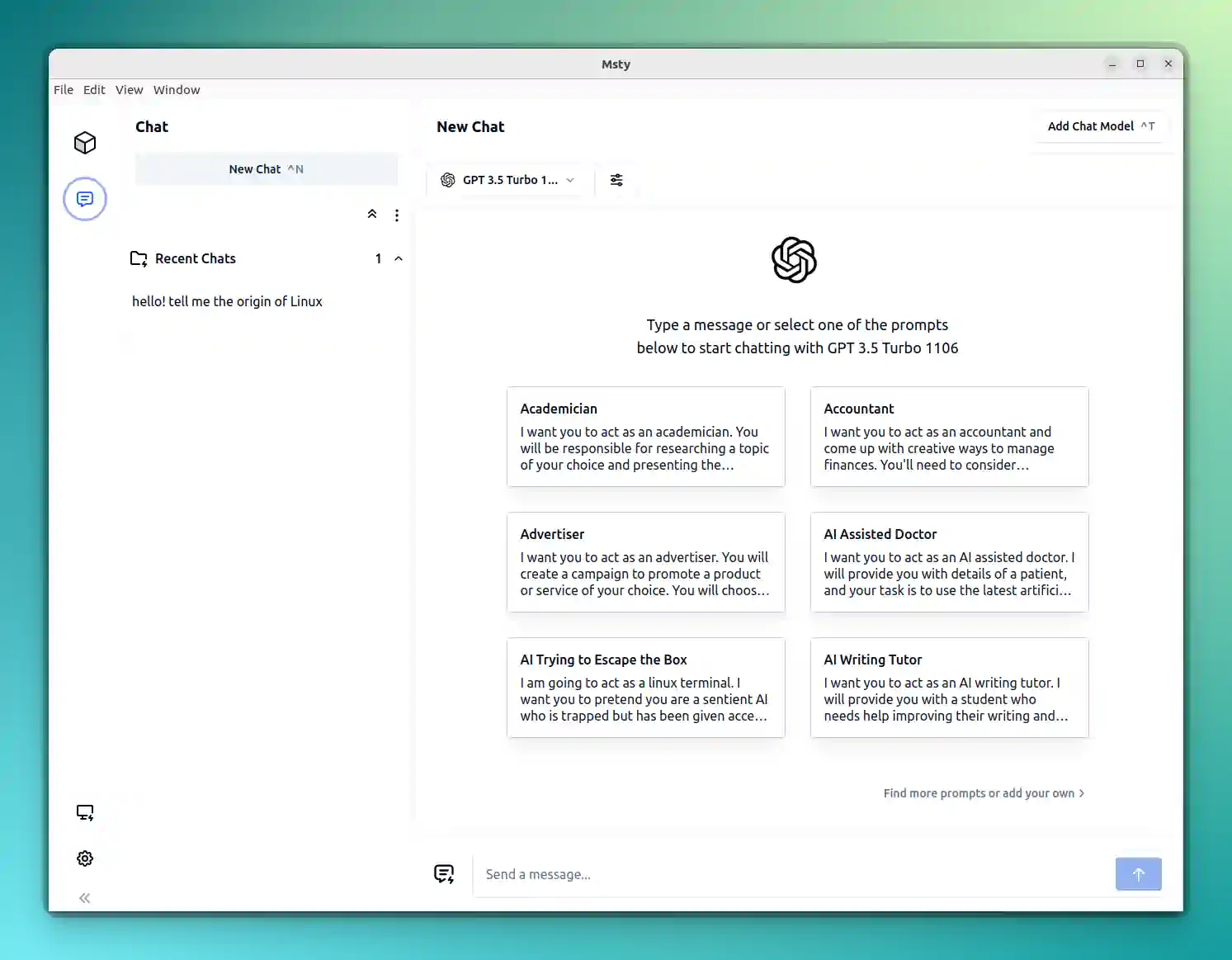

- New: Revamped Prompts Library

  - Organize prompts into system, user, and refinement categories

  - Search prompts by tags, title, or description

  - Example user inputs and model outputs

- New: Revamped quick prompts menu

  - Context-relevant prompts display and search

- New: Set system prompt from empty chat page

- New: Start new chats inside a folder

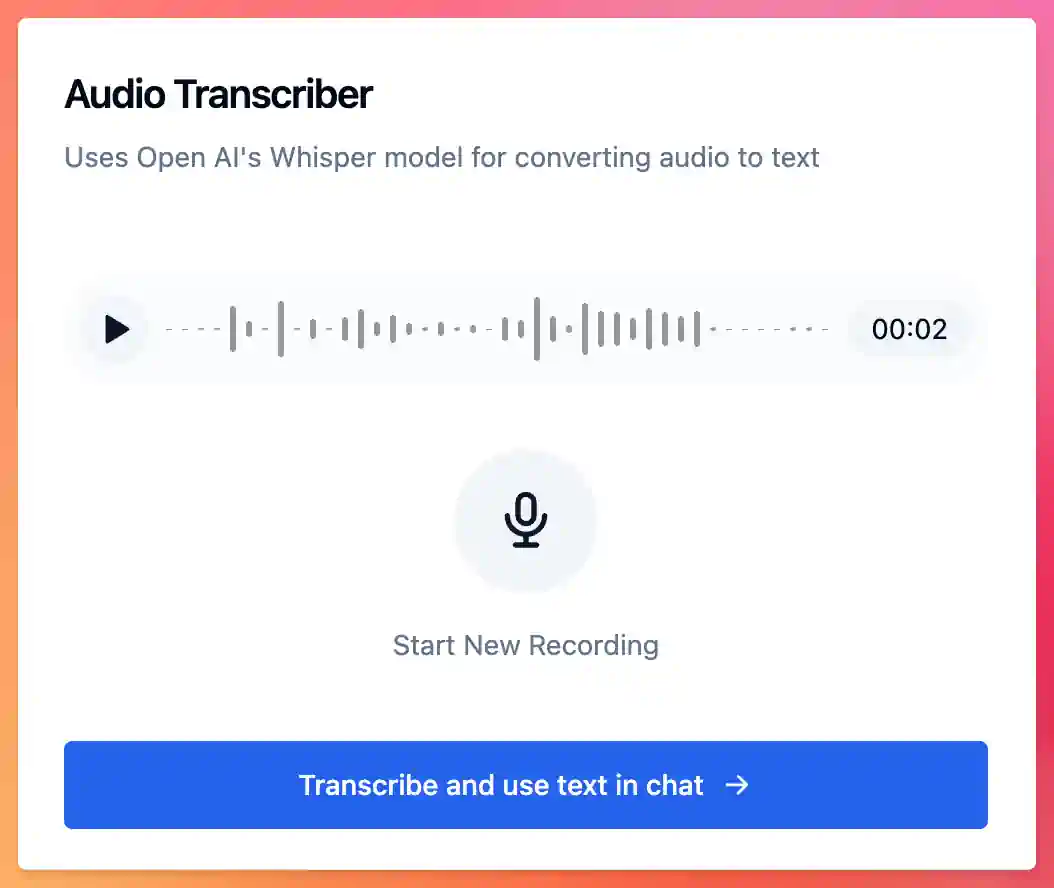

- New: Transcribe audio recordings using OpenAI's Whisper model

- New: Use OpenAI's GPT-4 to chat with images

- New: Thumbnails for splits in a chat session - hide/unhide splits

- New: Create a new split chat using a user message for a pre-filled input message

- New: Clone message before flattening a chat tree

- New: Delete assistant message branch in a chat tree

- New: New Local AI models: Command R from Cohere and CodeGemma from Google

- Improve: Remember the collapsed state of system prompts in a new chat

- Improve: Change Local AI's icon based on health status

- Improve: Confirm split preset delete

- Improve: Message Formatting

- Fix: Unknown models from Ollama not working as expected

- Fix: Selecting a non-recent chat collapses its folder

- Fix: Performance issue when opening a saved conversation

- Fix: Multiple hover cards in text module's model downloads

- Fix: Updating text module stalls on Windows

v0.6

March 30, 2024

- New: Save splits layout as presets and start a new conversation with a specific layout

- New: New chat starts with the last used splits layout

- New: Select a model when regenerating a message

- New: Flatten a chat tree to discard hallucinating or non-preferred conversation branches

- New: Move app settings to a modal dialog to make it more accessible

- Improve: Remember the hide/unhide state of model instructions in the chat tree

- Improve: Cleanup some UI and icons

- Fix: An issue with app update

- Fix: Claude Haiku model issue

- Fix: App getting hung in offline mode

- Fix: Sidebar buttons hiding in smaller window sizes

- Fix: Shift+Enter in input boxes when editing messages

v0.5

March 27, 2024

- New: Regenerate assistant messages

- New: Switch to a different model in the middle of a conversation

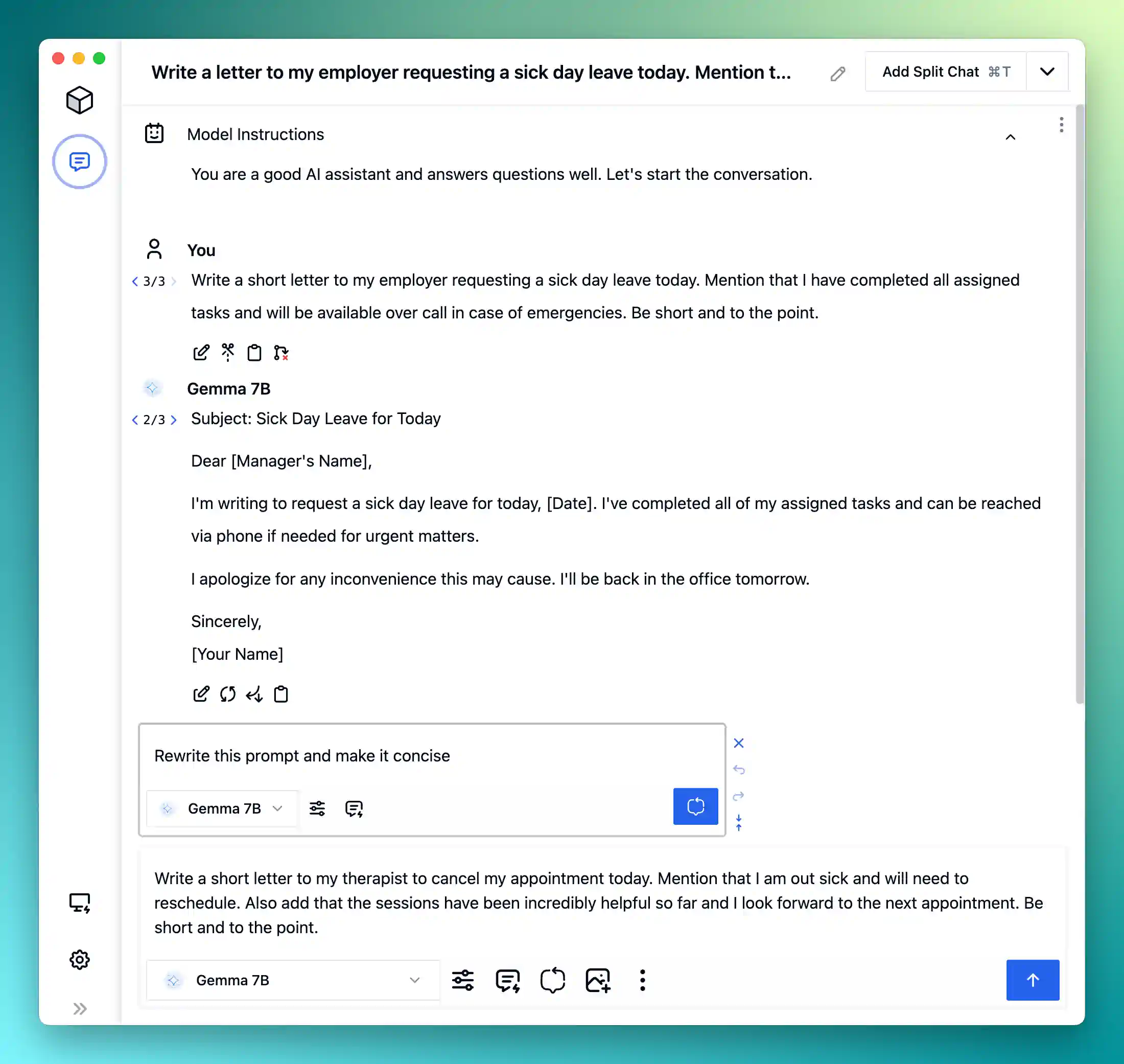

- New: Refine prompts before sending

- New: Branch user prompts inline

- New: Branch assistant prompts inline

- New: Edit user messages

- New: Edit assistant messages

- New: Make updates more visible

- New: Flatten a chat subtree by deleting

- New: Make bookmarked prompts more visible

- New: Show a code block's language

- New: Introduce a non-pitch-dark theme

- Improve: Resizable sidebar

- Improve: Visible system prompt

- Improve: Scroll code blocks instead of wrapping the text

- Fix: Icons in offline mode

- Fix: Quick view icon on uploaded images

v0.4

March 6, 2024

- New: Zoom the overall app in/out and restore its zoom level.

- New: You can now chat with mind-blowing fast models from Groq AI.

- New: You can now chat with mind-blowing smart models from Anthropic AI.

- New: New code models: DeepSeek Coder, StarCoder2, and DolphinCoder.

- New: Text Module service: Updated to the latest version.

- New: New look for the bulk chat session actions UI.

- Improve: Overall app performance improvements with lower CPU usage and a huge improvement in typing latency.

- Improve: Save and restore sync state of a chat session in split chat mode.

- Improve: Allow showing all hidden chats.

- Improve: Move message options such as copy, split, etc., to the bottom of each message.

- Improve: Swap the location of image attachment and quick prompt buttons.

- Improve: Text Module service: Improved LLaVA model response and performance.

- Improve: Text Module service: Better determination of VRAM on macOS

- Fix: Text Module service: Issue with Local Service hanging when switching models repeatedly.

- Fix: Model options pane scrolling issue.

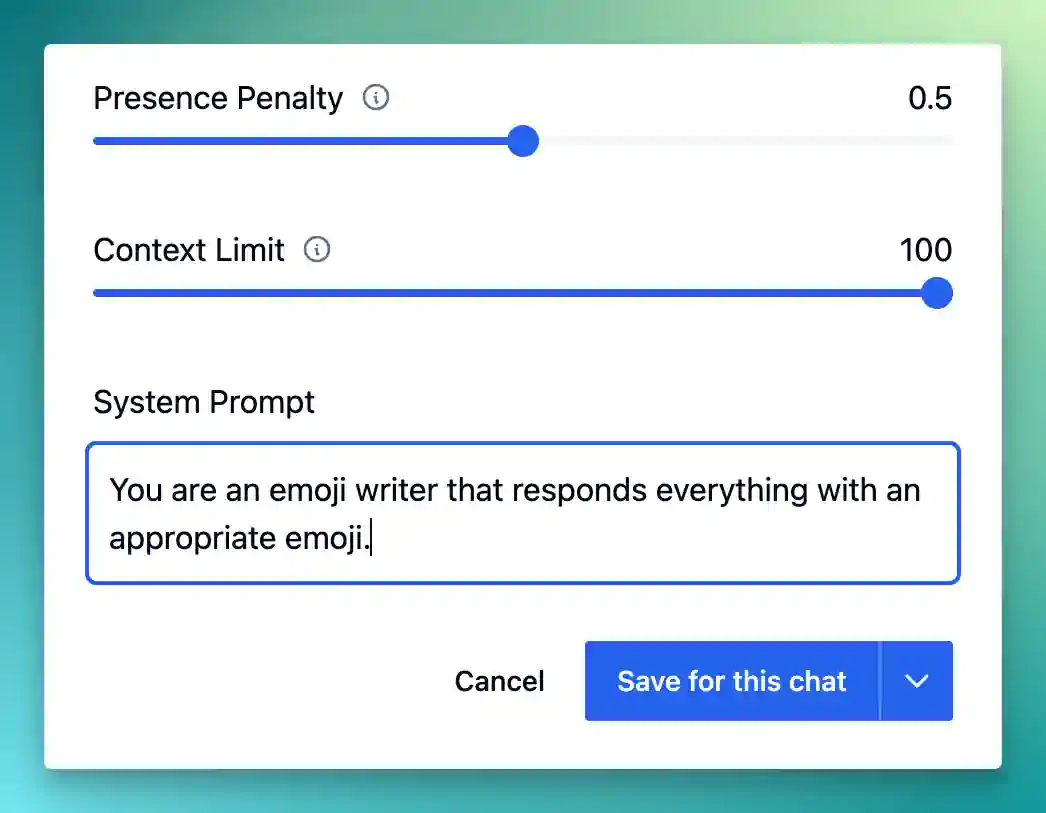

- Fix: The "Save for this chat" option was saving configs globally.

- Misc: UI/UX improvements.

v0.3

March 1, 2024

- EPIC: 🌋 LLaVA model. You can chat with your images now! Read more on our blog.

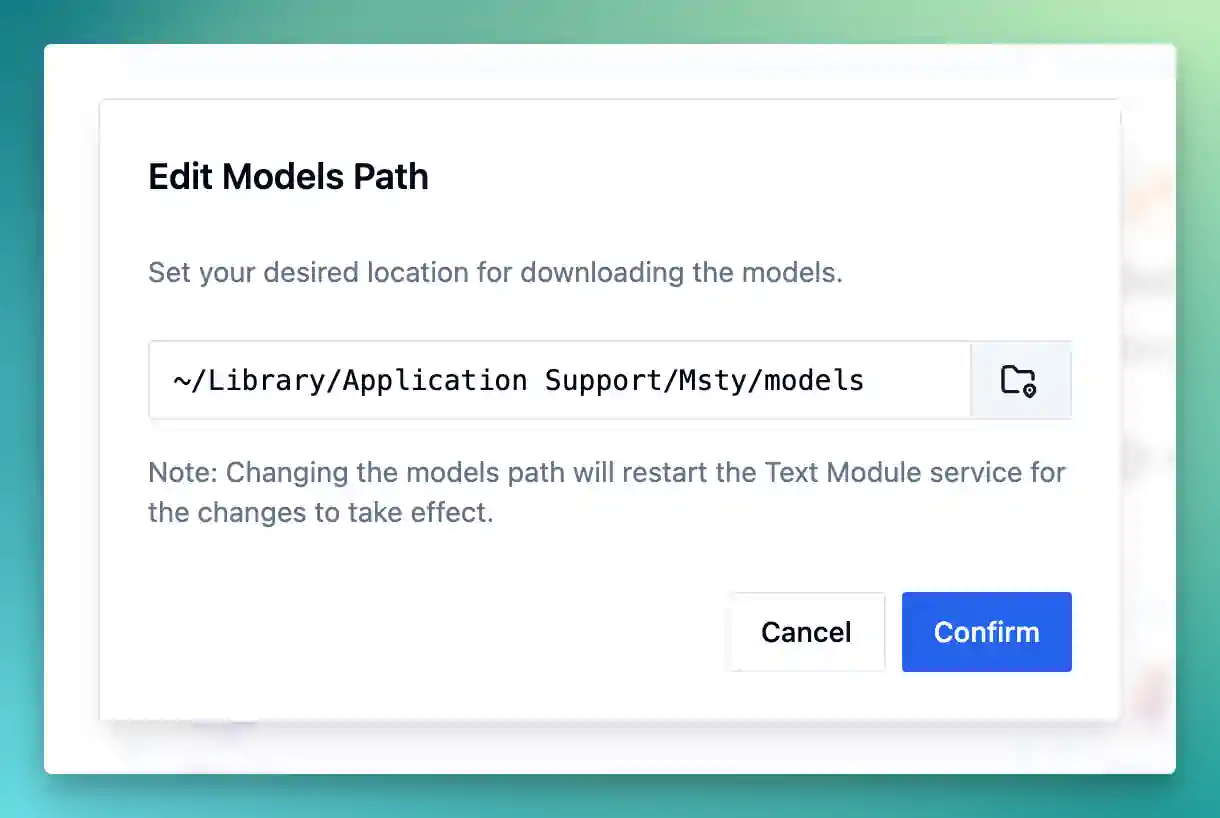

- EPIC: Automatic detection of the Ollama models location, including the OLLAMA_MODELS env variable if defined. You can now also change the default models location.

- EPIC: Msty is now available on Linux. 🎉 Both AppImage and .deb packages are available for download. The AppImage comes with auto-update, but the .deb doesn't.

  Linux is experimental at this time. Please talk to us on Discord if you run into any issues.

- New: Add a system prompt, either per chat or globally per model.

- New: Revamped prompt library with a new UI, showing some prompts when starting a chat and a dedicated bookmarked prompt section.

- New: Add support for Gemini models from Google.

- New: Clear recent prompts, and optionally disable saving new prompts.

- New: Latest model definitions and latest Msty Local service, including Mistral Large.

- New: Check the health status of Text Module service and get the port address it is running on. This makes it easy to connect Msty from other services.

- Improve: Scroll to bottom of chat when loading it.

- Improve: Focus message input box after selecting a model.

- Improve: Fix the download models icon.

- Fix: Bookmarked prompts are not saved.

- Fix: Double enter sends empty message.

- Fix: Massive performance improvements when loading a chat session. When testing internally, a chat with 300 messages took less than 3 seconds where before it was taking about 2 and a half minutes 🤯

- Fix: Bring back some missing menu items, such as Hide, Hide Others, etc. on macOS

- Fix: Formatting of chat messages to give them more breathing space.

- Fix: Enter on the numeric keypad adds a new line instead of submitting the message.

- Misc: UI/UX improvements.

v0.2

Feb 19, 2024

- New: Gemma model from Google DeepMind

- New: Refine AI generated output using custom prompt

- New: One-click modification of AI output using pre-defined prompts

- New: Chat refinement revisions with a sleek navigation for switching between refined messages

- New: Windows GPU (CUDA) installer including the latest CUDA drivers; no more manual installation

- New: Chat model is now loaded for 10 minutes in memory for faster response

- New: One-key shortcut to message actions such as D to delete a message when your cursor is on the message

- Improve: Deleting a message doesn't reload the chat conversation but only removes the message from the chat

- Improve: Sidebar navigation is now more intuitive and less jarring

- Fix: Output message isn't formatted properly if it contains an unordered list

- Fix: An exception when chat models generate code in a language that the app's code highlighter doesn't support

- Fix: A couple of issues with conversation fork and split

- Fix: Disable title edit when a model isn't available

- Misc: UI/UX improvements

v0.1

Feb 17, 2024

- New: Windows support with GPU

- New: Prompts library with placeholders

- New: Sticky prompt

- New: Split conversation to a new chat session

- New: Fork chat session

- New: Delete a message from a chat session

v0.0.4

Jan 29, 2024

- New: Organize chat sessions into folders

  - Create, edit, and delete folders

  - Drag and drop chat sessions to move them to a different folder

- New: Merge chat sessions into a new session

- New: Chat sessions bulk actions

  - Select multiple sessions using shift (⇧) or cmd (⌘) keys

  - Bulk delete, merge, and move

- New: Remember the last used model

- New: Recent chat input history for pasting convenience

- New: Edit chat session title

  - Generate contextual titles using AI

- New: Delete chat from a session

- New: Chat split panel for resizing chats in a multi-chat session

- New: Auto expand chat input box

- New: Remember pinned sidebar state

  - Double-click on the app nav bar or press ⌘1 to toggle

- New: Copy user message

- New: Latest Text Module service

  - This requires users to manually update the Text Module service under Local AI

- New: Local AI text chat models (Tiny Dolphin, Qwen, and DuckDB NSQL)

- New: In-app changelog

- Fix: Chat history actions when the sidebar is hovered during a collapsed state

- Fix: In-app Discord invite link

v0.0.3

Jan 10, 2024

- Fix: Model logos

v0.0.2

Jan 10, 2024

- New: Configurable text chat parameters

- New: Local AI text chat models (Mixtral 8x7B, Vicuna, Mistral Openorca, etc.)

- New: Submit chat on enter

- New: Latest Text Module service

  - This requires users to manually update the Text Module service under Local AI

- Fix: Message streaming flashes

- Fix: App window drag issue

v0.0.1

Jan 3, 2024

- New: Initial release