The easiest way to use local and online AI models

Without Msty: painful setup, endless configurations, confusing UI, Docker, command prompt, multiple subscriptions, multiple apps, chat paradigm copycats, no privacy, no control.

With Msty: one app, one-click setup, no Docker, no terminal, offline and private, unique and powerful features.

Using local AI models for free, like the new reasoning model from DeepSeek, is just a step away!

"I just discovered Msty and I am in love. Completely. It’s simple and beautiful and there’s a dark mode and you don’t need to be super technical to get it to work. It’s magical!" - Alexe

Offline-First, Online-Ready

Msty is designed to function seamlessly offline, ensuring reliability and privacy. For added flexibility, it also supports popular online model vendors, giving you the best of both worlds.

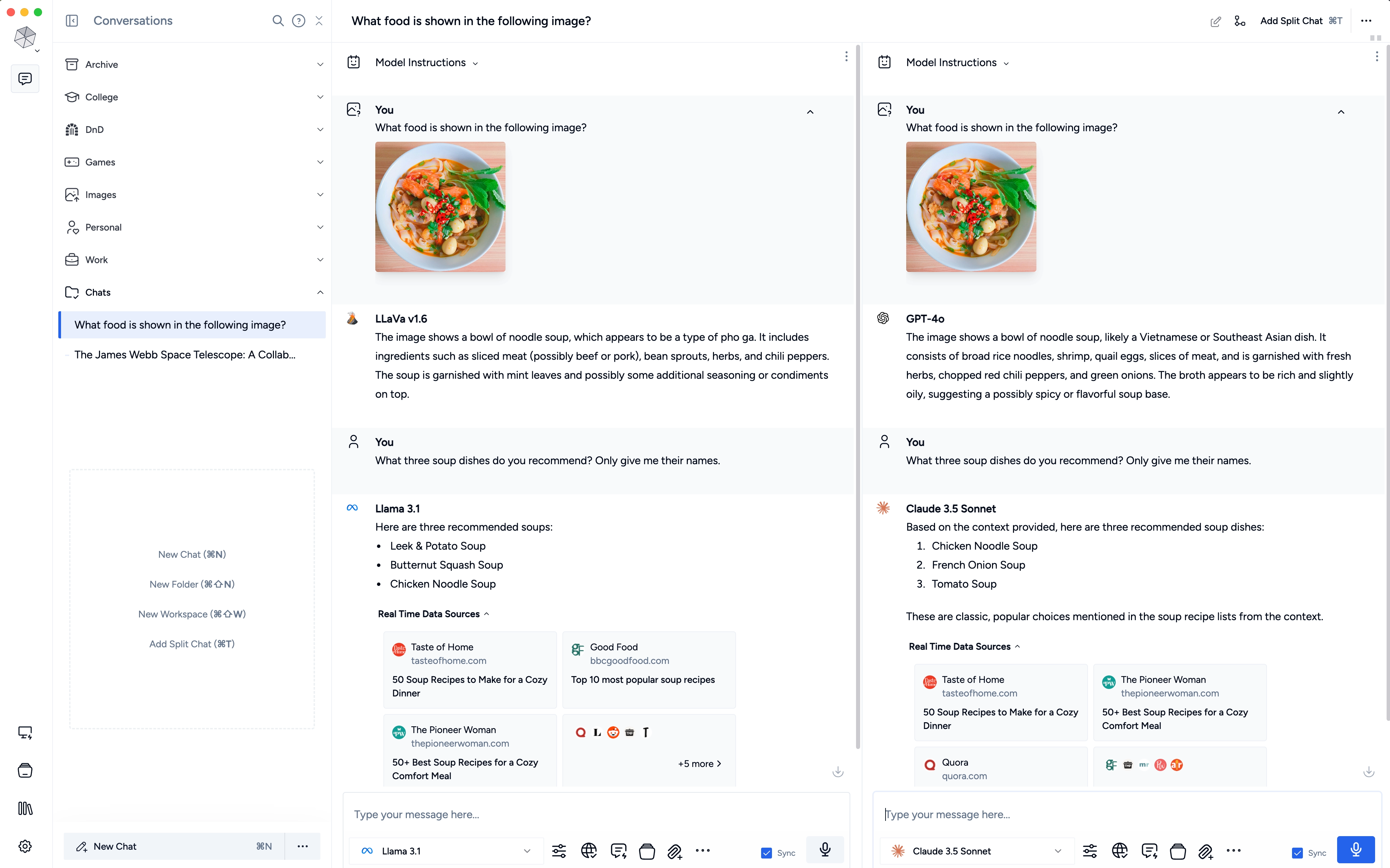

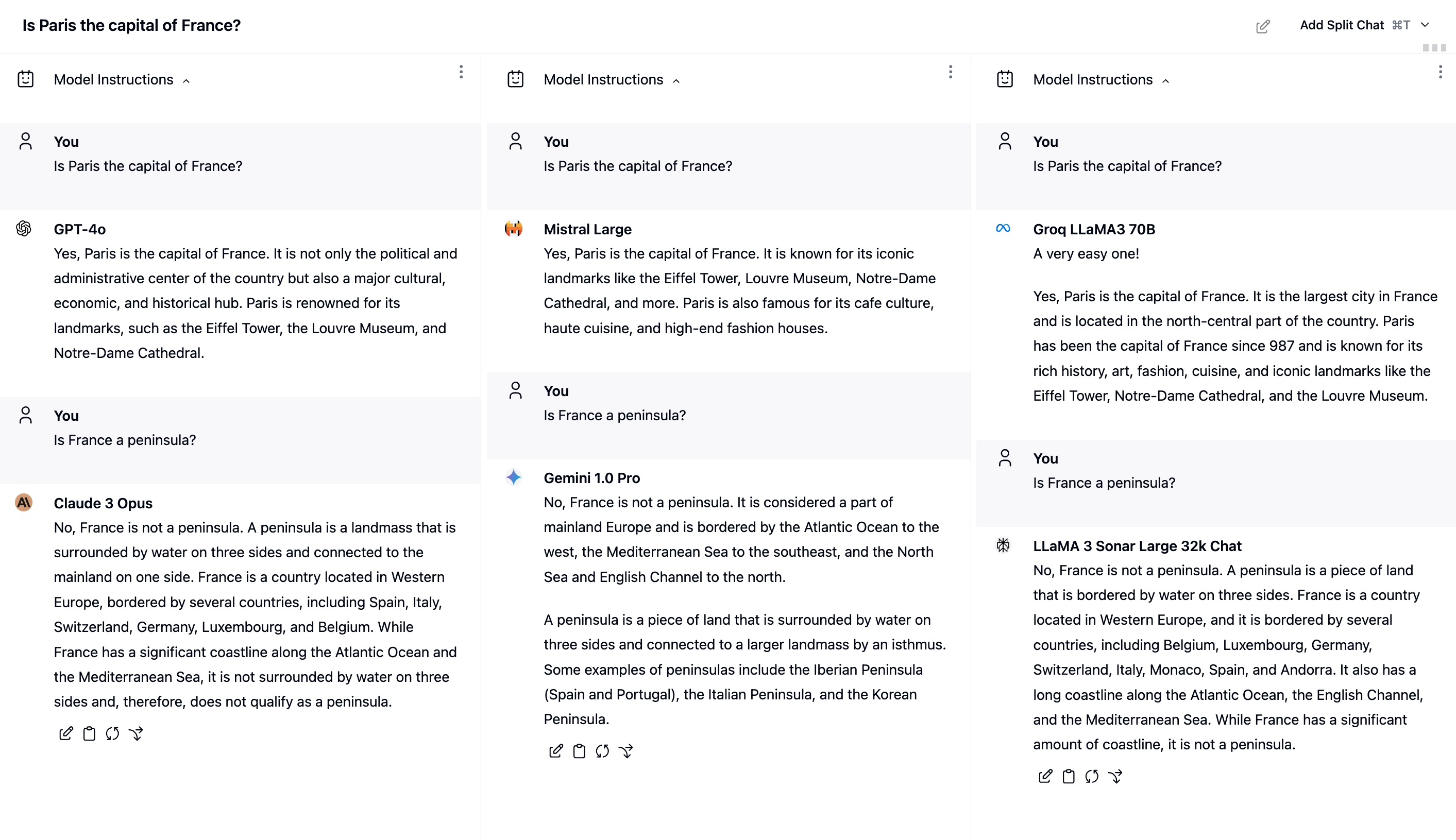

Parallel Multiverse Chats

Revolutionize your research with split chats. Compare and contrast responses from multiple AI models in real time, streamlining your workflow and uncovering new insights.

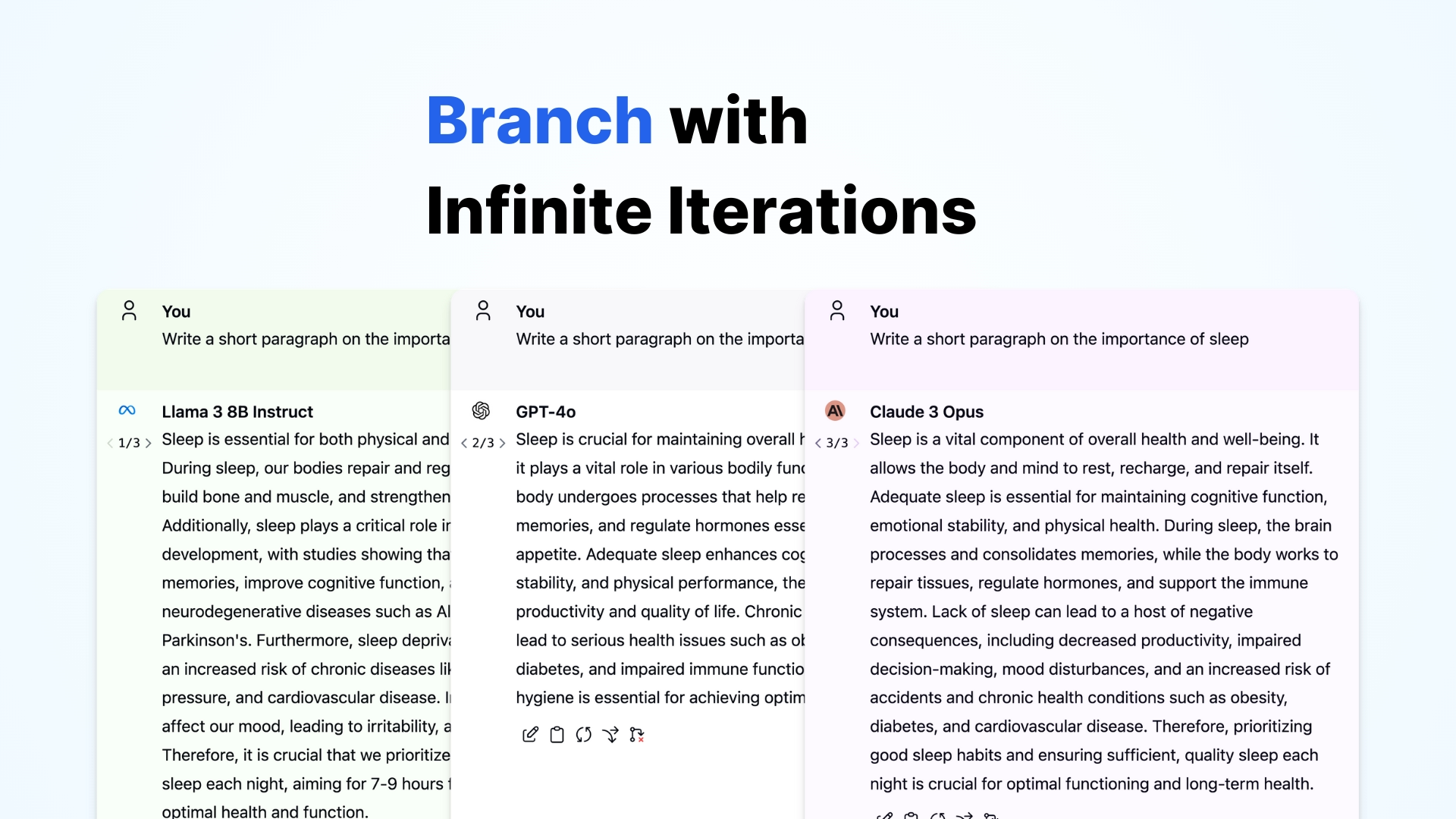

Craft your conversations

Msty puts you in the driver's seat. Take your conversations wherever you want, and stop whenever you're satisfied.

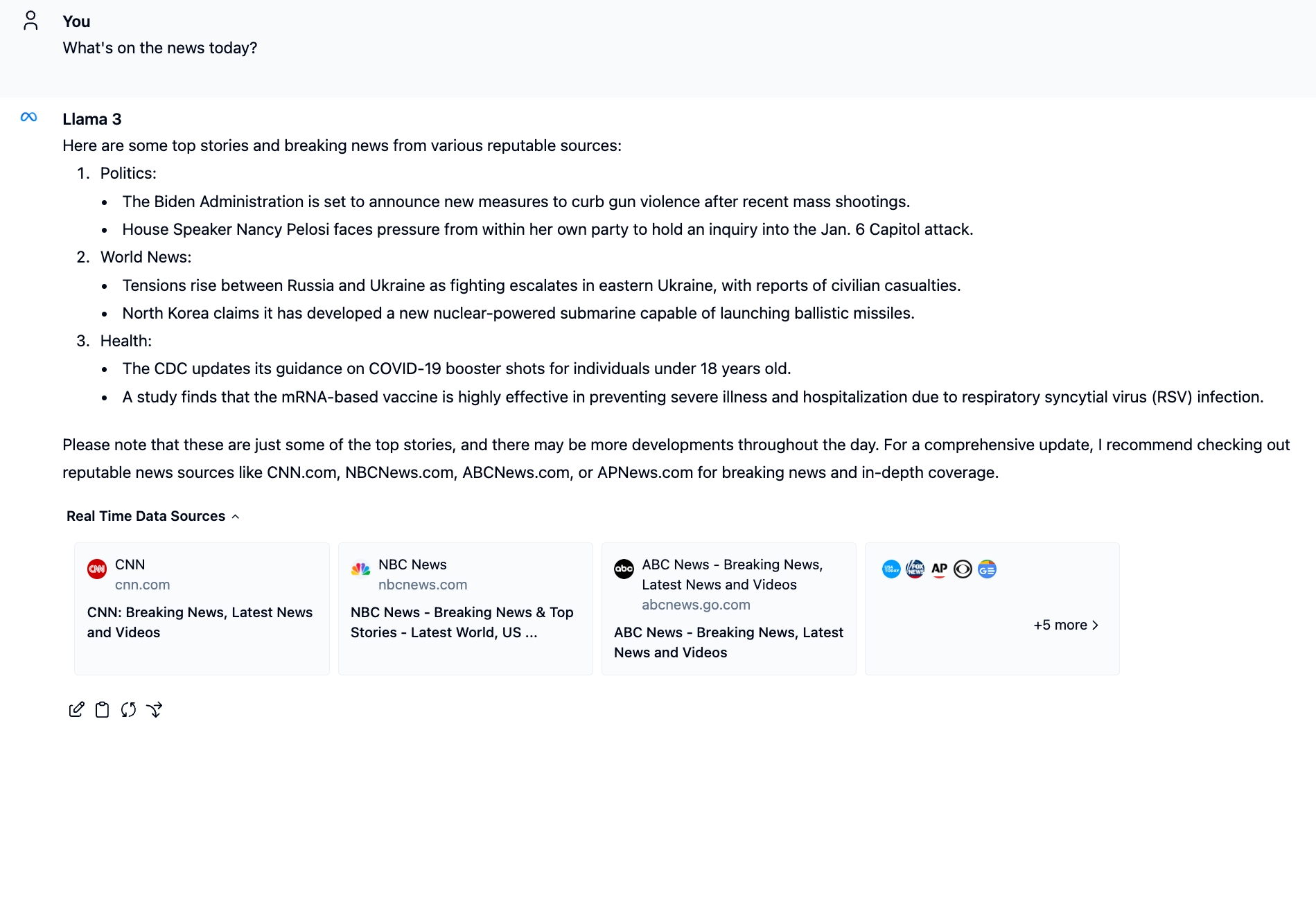

Summon Real-Time Data

Stay ahead of the curve with our web search feature. Ask Msty to fetch live data into your conversations for unparalleled relevance and accuracy.

RAG Done Right

Msty's Knowledge Stack goes beyond a simple document collection.

Leverage multiple data sources to build a comprehensive information stack.

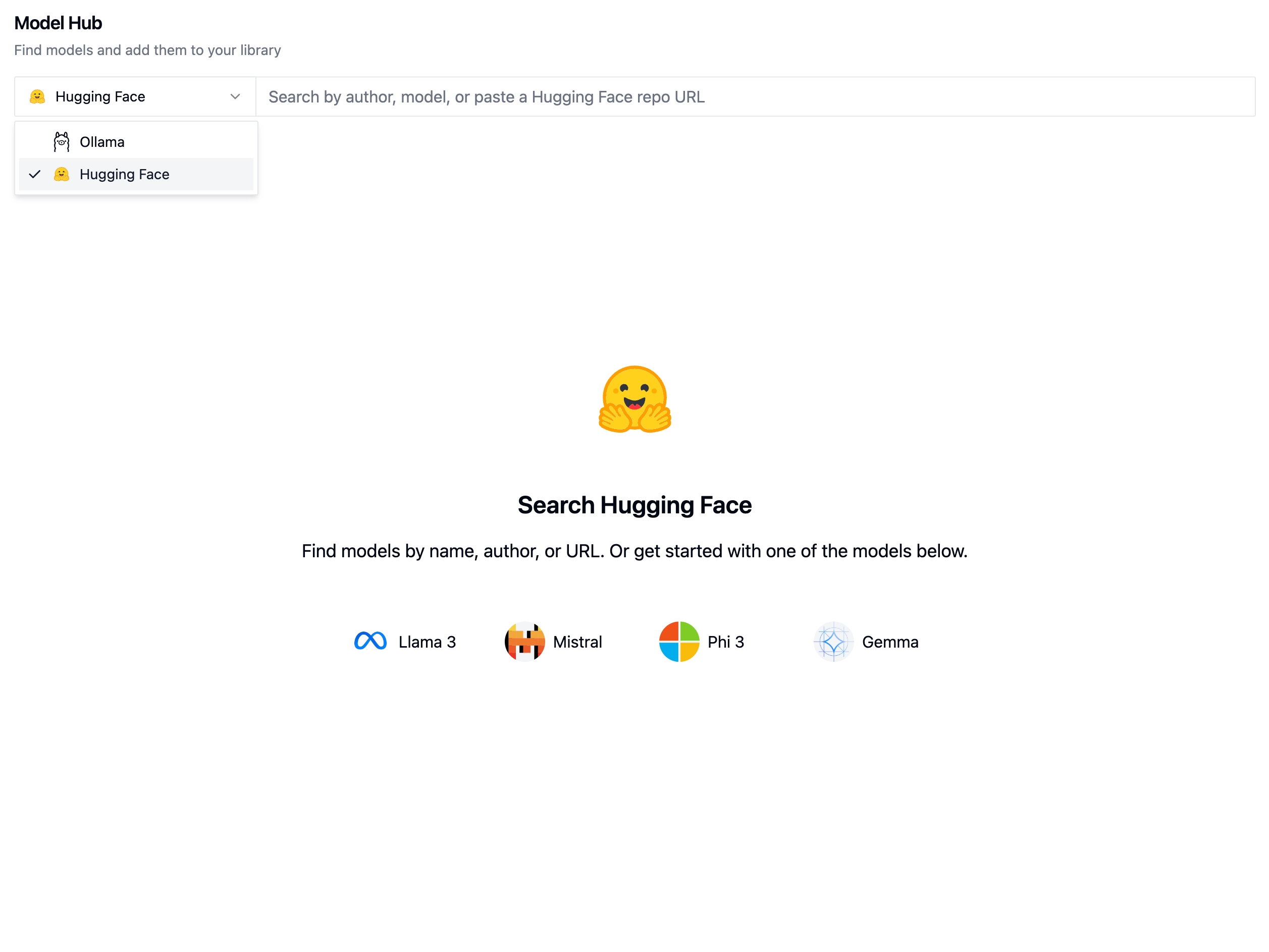

Unified Access to Models

Use any model from Hugging Face, Ollama, and OpenRouter. Choose the best model for your needs and seamlessly integrate it into your conversations.

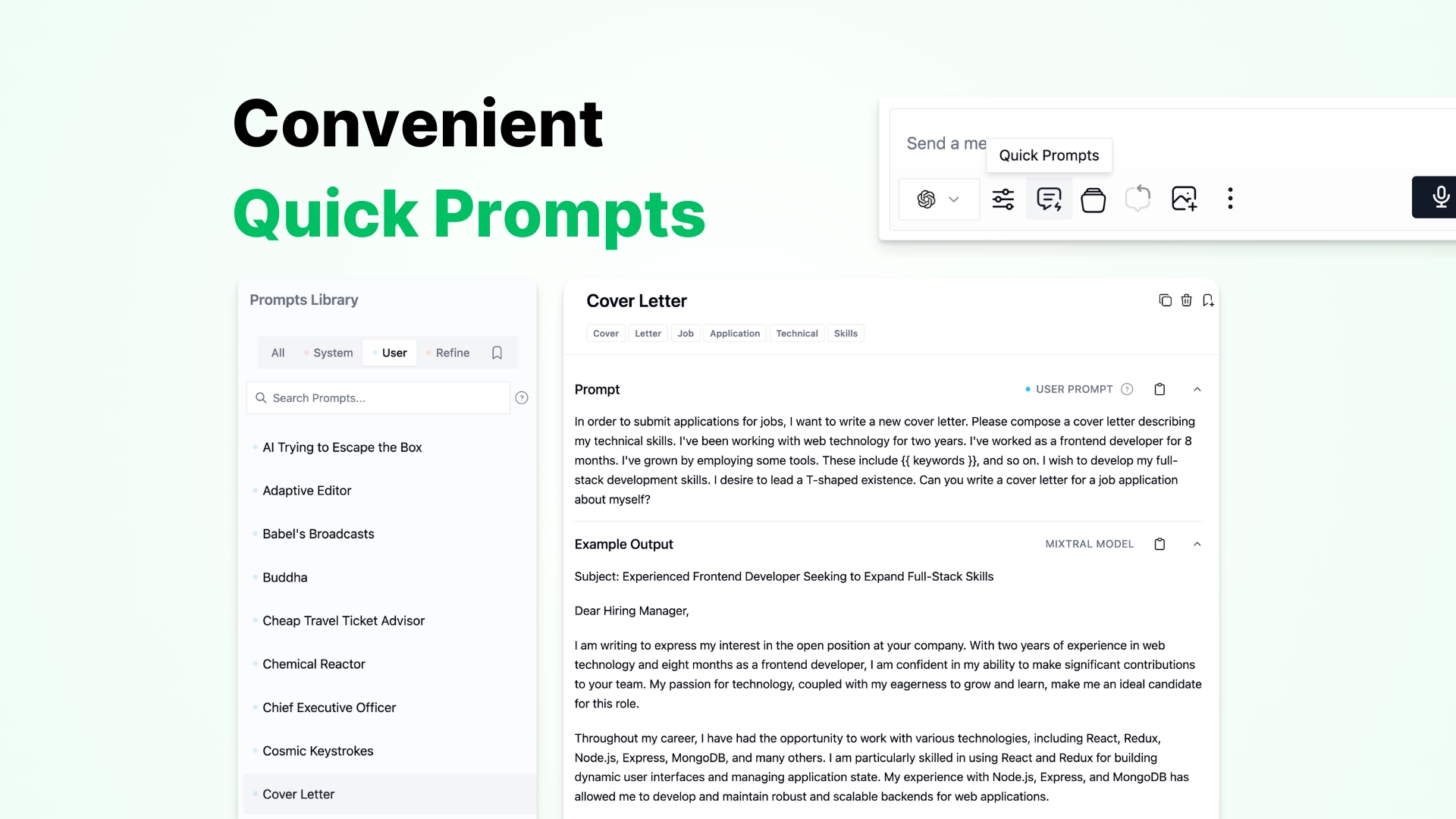

Prompt Paradise

Access a ready-made library of prompts to guide the AI model, refine responses, and fulfill your needs. You can also add your own prompts to the library.

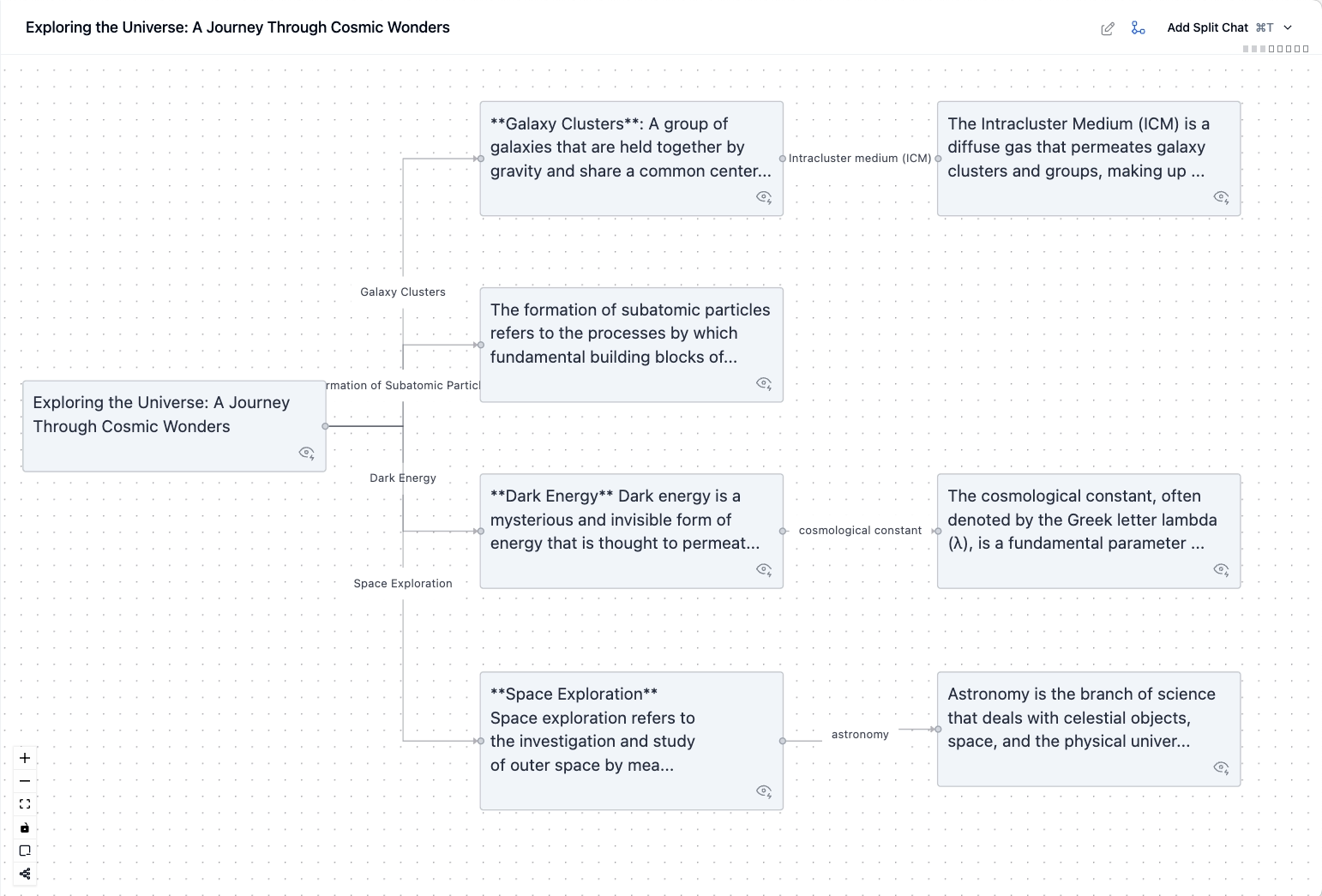

Discover & Visualize

Get ready to converse, delve, and flow! Our dynamic duo of features combines the power of delve mode's endless exploration with Flowchat™'s intuitive visualization.

Getting lost in the rabbit holes of knowledge discovery has never been more fun!

And many more features...

Ollama Integration

Use your existing models with Msty

Ultimate Privacy

No personal information ever leaves your machine

Offline Mode

Use Msty when you're off the grid

Folders

Organize your chats into folders

Multiple Workspaces

Isolate data into separate workspaces and sync them across devices

Attachments

Attach images and documents to your chat

See what people are saying

Yes, these are real comments from real users. Join our Discord to say hi to them.



Ready to get started?

We are available on Discord if you have any questions or need help.